Assessing Online Readiness of Students

Raymond Doe

Lamar University

rdoe@lamar.edu

Matthew S. Castillo

Lamar University

mcastillo21@lamar.edu

Millicent M. Musyoka

Lamar University

mmusyoka@lamar.edu

Abstract

The rise of distance education has created a need to understand students’ readiness for online learning and to predict their success. Several survey instruments have been developed to assess this construct of online readiness. However, a review of the extant literature shows that these instruments have varying limitations in capturing all of the domains of student online readiness. Important variables that have been considered in assessing students’ readiness for distance education include attrition and information and communications technology (ICT) engagement. Previous studies have indicated that high attrition rates in online programs can be prevented by assessing student online readiness. The present study examined undergraduate students’ online readiness using an instrument developed by the researchers that included constructs such as ICT engagement, motivation, self-efficacy, and learner characteristics. The addition of these subscales further strengthens the reliability and validity of online learning readiness surveys in capturing all the domains of student online readiness.

Introduction

Online education programs continue to receive mixed reviews from both faculty and students despite yearly increases in enrollment (Allen & Seaman, 2013). At the postsecondary level, the growth of online enrollment has outpaced that of traditional face-to-face enrollment, at a conservatively estimated rate of 10% (Allen & Seaman, 2008; Jaschik, 2009; Lokken, 2009). There is currently no consensus on a single term for this mode of teaching or learning; variations include distance education, online classes, and online learning. In addition, this type of learning or teaching is sometimes structured as a hybrid, incorporating synchronous sessions along with some asynchronous meetings.

Among the benefits often cited by online education advocates and researchers are that online education offers flexibility, affordability, and portability, especially to a population who otherwise would not get the opportunity to earn a degree (Carr, 2000; Mayes, Luebeck, Ku, Akarasriworn, & Korkmaz, 2011). This population includes adult learners, working parents, stay-at-home parents, veterans, and disabled individuals. Of greatest concern, however, is the unaddressed problem of online learner retention. Compared to traditional face-to-face learning, the attrition rate for online learning is higher (Carr, 2000; Moody, 2004; Willging & Johnson, 2004). For example, Lee and Choi (2011) reported that retention rates for online learning are 10% to 25% lower than for face-to-face learning. Smith (2010) also indicated that, in total, 40% to 80% of online students drop out of online classes. A review of the extant literature identified specific factors that contribute to students’ persistence in any academic program (Dray et al., 2011; Hart, 2012; Tinto, 1975, 1993). Tinto’s (1975) Student Integration Model is usually adapted as the theoretical framework for online readiness studies. Specifically, student engagement is identified as the key to eliminating, or at least reducing, low achievement, student alienation, and high dropout rates (Fredricks, Blumenfeld, & Paris, 2004). In terms of online learning, Yu and Richardson (2015) have documented studies that link learner outcomes or satisfaction to these factors. In summary, one crucial factor that cannot be ignored in any discourse on online attrition is students’ readiness for online learning (Davis, 2010; Miller, 2005; Plata, 2013; Yu & Richardson, 2015).

Some notable studies on this subject (Kerr, Rynearson, & Kerr, 2006; McVay, 2003; Smith, 2005) have called for online education programs to put in place an initial assessment that identifies students who have previous online experience and those who are familiar with telecommunication technology. Such assessments aim to estimate the probability that students will complete their online education. A number of online education programs, such as those at Pennsylvania State University, the University of North Carolina at Chapel Hill, the University of Houston, Florida Gulf Coast University, and Northern Illinois University, have provided self-assessments on their websites for incoming students to appraise their current skills and abilities. McVay (2001a) also reported that some programs, such as those at the University of California, Los Angeles and The State University of New York, publish these learner readiness surveys so that prospective applicants can self-assess and compare themselves to the results.

A foundational study on readiness (Warner, Christie, & Choy, 1998) identified three aspects of the concept of readiness. As summarized by Hung, Chou, Chen, and Own (2010), these three aspects are:

“(1) students’ preferences for the form of delivery as opposed to face-to-face classroom instruction; (2) student confidence in using electronic communication for learning and in particular competence and confidence in the use of internet and computer-mediated communication and (3) ability to engage in autonomous learning” (p. 1081).

Building on these aspects, subsequent studies, including Mattice and Dixon (1999) and McVay (2000, 2001a, 2003), designed items to measure these dimensions of online readiness. The common themes emerging from these studies center on ‘learner characteristics’, ‘computer skills’, and ‘self-management of the process.’ Dray et al. (2011) tested two-factor, three-factor, and five-factor models of these themes with a 39-item instrument. A review of previous studies on online readiness produced a comprehensive list of studies and their corresponding measures, presented in Table 1.

Over the years, online readiness instruments have fallen short of reflecting the current online learning environment and of measuring the social context of the learner and other significant success factors such as motivation and self-efficacy (Yu & Richardson, 2015). These instruments either focused on assessing only some components of online education or had too few items to measure all of its aspects. Some studies focused on measuring whether students have the resources to take an online course. Having the resources, however, does not mean that students use them for academic purposes. While some studies focused solely on assessing learning styles, others ignored the need to assess writing competencies and learners’ inclination toward learning completely online. While some studies sampled only online learners, others aimed to identify the characteristics of successful online learners a posteriori.

Currently, no single instrument has addressed all of these gaps in assessing online readiness. While there is no consensus on the number of underlying theoretical constructs an instrument should include to measure online readiness succinctly, the retention rate of online learners continues to decrease (Ali & Leeds, 2009; Lee & Choi, 2011). Researchers, educators, and policymakers have therefore issued a clarion call to measure students’ readiness for online education as an important and necessary step (Yu & Richardson, 2015). This study critically reviews previous online readiness assessments and develops an instrument to assess the online readiness of students in postsecondary education.

Method

Participants

Participants in this study were undergraduate students taking lower-level psychology courses at a university in the Southeastern region of the United States. A total of 104 participants (N = 104) took part in the study. Their majors were Psychology, Social Work, Criminal Justice, Pre-Nursing, Teacher Education, Business, and Family and Consumer Sciences. Of the participants, 84.6% were female (n = 88) and 15.4% were male (n = 16). Ages ranged from 18 to 67 years, with a median age of 20.

Instrument

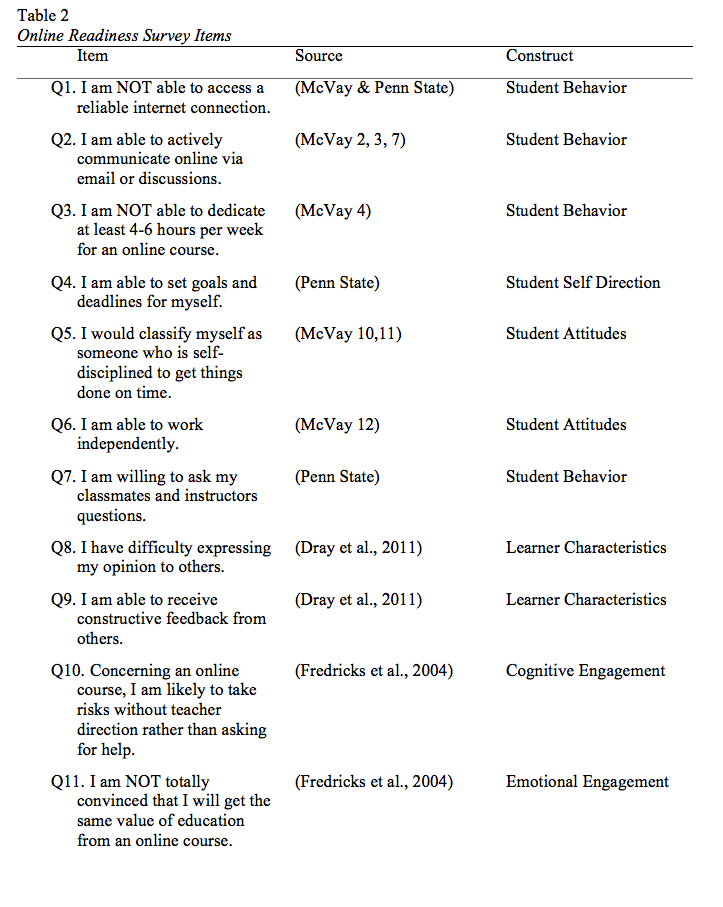

An extensive review of online readiness surveys was conducted and the items used to measure the construct were extracted, followed by multiple rounds of discussion to refine the items into simple statements free of ambiguity. A graduate student also carried out a critical systematic review (cognitive testing), as suggested by Fowler (2009), in which items were evaluated for how consistently they were understood. Faculty from Deaf Studies/Deaf Education and Psychology reviewed the items used in previous studies, considering both the constructs the items were intended to measure and the recommendations of the original authors. This process of ensuring content and face validity, as recommended by Trochim (2006), led to the development of items for the current instrument. This approach was adopted instead of selecting entire scales or subscales from previous studies in order to keep up to date with the field of online learning. It also helps ensure that each item has a current justification for inclusion and does not carry over the biases and random error of previous instruments. As a result, the team of subject matter experts included items that captured ICT engagement as well as intention to take an online course, both of which are believed to relate to attrition among students taking online classes (Dray et al., 2011; Tinto, 1993). These additions are expected to improve upon previous instruments, which usually revolve around two subscales: learner characteristics and technology characteristics (Dray et al., 2011). McVay (2000) also identified two potential predictors of online learner readiness: students’ behaviors and attitudes.

Undergraduate students in a research methods class took part in the pilot study. Of the 27 participants, one participant’s data were excluded from the analysis because the student was under the age of 18, leaving a final n of 26. Ages ranged from 18 to 40 years. There were 20 females and 7 males. All but 5 students were currently working. Their majors were Psychology, Pre-Nursing, Criminal Justice, Family and Consumer Sciences, and Business. Of the students, 92% (n = 24) had previously taken an online class. After completing the survey, participants discussed each item in terms of wording, meaning, and its relationship to measuring online readiness. They were also asked whether any items could be reworded and whether any items measured a different construct. Each of the 16 items (some reworded) was traced back to its source study. See Appendix A (Table 2) for the resulting assessment instrument developed and used in this study.

Reliability and validity

Reliability and validity analyses were carried out after reverse scoring negatively worded items. Internal consistency was assessed with Cronbach’s alpha to determine the extent to which participants’ responses to the items reflected the same construct (online readiness). A Cronbach’s alpha of .70 or above is usually considered acceptable (Leary, 2004). Correlations between each item and the total scale score were also computed. To explore whether the items formed a unidimensional construct or reflected two, three, or more of the common clusters (subscales) identified in existing instruments (Dray et al., 2011; Hung et al., 2010; McVay, 2000, 2003; Warner et al., 1998; Yu & Richardson, 2015), an exploratory factor analysis (EFA) was conducted. EFA was chosen instead of confirmatory factor analysis (CFA) because the literature is inconclusive regarding the number of theoretical constructs underlying online readiness. EFA is typically used when there is no consensus on the dimensions underlying a construct, and it helps identify items that do not relate to the other items, thus improving the reliability of the instrument (Netemeyer, Bearden, & Sharma, 2003). This approach, however, cannot support causal inferences or test hypotheses.
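Reverse scoring and Cronbach’s alpha can be sketched in a few lines of Python. This is a minimal illustration, not the authors’ analysis code: the function names and the four-respondent data set are hypothetical, and it assumes the 1-to-7 response scale described under Data collection, so a negatively worded response x is recoded as 8 − x. Alpha follows the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores), where k is the number of items.

```python
from statistics import variance

# Assumed 1-7 Likert anchors (1 = Very untrue of me, 7 = Very true of me).
SCALE_MIN, SCALE_MAX = 1, 7


def reverse_score(x: int) -> int:
    """Recode a negatively worded response: 1<->7, 2<->6, 3<->5, 4 stays 4."""
    return SCALE_MIN + SCALE_MAX - x


def cronbach_alpha(rows: list[list[float]]) -> float:
    """Cronbach's alpha for rows[respondent][item], already reverse scored.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)).
    Sample variances are used for both terms; the ratio is unchanged with
    population variances as long as both terms use the same denominator.
    """
    k = len(rows[0])
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical responses: 4 respondents x 3 items. Item index 2 is
# negatively worded, so it is reverse scored before computing alpha.
RAW = [
    [6, 7, 2],
    [5, 6, 3],
    [3, 4, 5],
    [2, 2, 6],
]
NEGATIVE_ITEMS = {2}

scored = [
    [reverse_score(x) if i in NEGATIVE_ITEMS else x for i, x in enumerate(row)]
    for row in RAW
]
print(scored)                            # -> [[6, 7, 6], [5, 6, 5], [3, 4, 3], [2, 2, 2]]
print(round(cronbach_alpha(scored), 3))  # -> 0.993
```

Running `cronbach_alpha(RAW)` on the unrecoded responses gives a negative value for this toy data, which illustrates why negatively worded items are reverse scored before the reliability analysis.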

Data collection

After institutional review board approval was obtained, students were invited to participate in the study. Participants were first given a consent form to sign, which described the nature of the study, including the online readiness questionnaire they would complete. Once individuals signed the consent form and agreed to participate, they were given the online readiness survey. The survey asked respondents to rate each item according to how well it described them, using a Likert-type scale ranging from 1 (Very untrue of me) to 7 (Very true of me). Demographic items were also included to gather more information about the participants, including gender, age, employment status, intention to take an online course, and experience with and access to technology. Participants were asked to answer the questions as accurately as possible.

Results

The results showed the scale to be reliable (16 items; α = .723); that is, participants tended to respond consistently across the items measuring online readiness. Deleting item 10 or item 15 would increase Cronbach’s alpha to .758 or .753, respectively. Item-total correlations were therefore examined to determine whether these two items correlated with the total score in the same way as the rest of the items. Items 10 and 15 were the only items that were not significantly correlated with the total score. Both items were therefore deleted, resulting in a 14-item scale (α = .788).
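The item screening described above (alpha if an item is deleted, plus item-total correlations) can be sketched the same way. This is a hypothetical illustration: the five-respondent data set is invented so that the fourth item behaves like items 10 and 15 did here, and the sketch uses the corrected item-total correlation (each item against the sum of the remaining items), which is an assumption, since the study does not state whether the item was excluded from the total. It computes r only and does not reproduce the significance test reported above.

```python
from math import sqrt
from statistics import mean, variance


def cronbach_alpha(rows: list[list[float]]) -> float:
    """Cronbach's alpha for rows[respondent][item]."""
    k = len(rows[0])
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def alpha_if_item_deleted(rows: list[list[float]]) -> list[float]:
    """Alpha recomputed with each item left out in turn (needs k >= 3)."""
    k = len(rows[0])
    return [
        cronbach_alpha([[x for j, x in enumerate(row) if j != i] for row in rows])
        for i in range(k)
    ]


def corrected_item_total(rows: list[list[float]]) -> list[float]:
    """Correlate each item with the total of the other items."""
    k = len(rows[0])
    out = []
    for i in range(k):
        item = [row[i] for row in rows]
        rest = [sum(row) - row[i] for row in rows]
        out.append(pearson_r(item, rest))
    return out


# Hypothetical data: items 1-3 move together, item 4 does not.
ROWS = [
    [7, 6, 7, 2],
    [6, 6, 5, 6],
    [4, 5, 4, 3],
    [3, 2, 3, 7],
    [2, 1, 2, 4],
]

print(f"alpha = {cronbach_alpha(ROWS):.3f}")
for number, (a, r) in enumerate(
    zip(alpha_if_item_deleted(ROWS), corrected_item_total(ROWS)), start=1
):
    print(f"item {number}: alpha if deleted = {a:.3f}, r = {r:.3f}")
```

In this toy data, item 4 is the only item whose removal raises alpha and the only one with a non-positive item-total correlation, the same joint pattern used above to flag items 10 and 15.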

Factor Analysis

The factorability of the items was also examined. The Kaiser-Meyer-Olkin measure of sampling adequacy was .75, above the commonly recommended value of .6, and Bartlett’s test of sphericity was significant (χ²(91) = 422.72, p < .001). In addition, the diagonal values of the anti-image correlation matrix were all above .5 except for item 11 (.45). All communalities were above .2, indicating that each item shared some common variance with the other items and that the items could therefore be factor analyzed. Based on the Kaiser criterion (eigenvalue > 1) and the scree plot, the optimal solution had four factors, which together explained 59% of the total variance; Factor 1 alone explained 31%.
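As a worked check on the factorability statistics above, the standard formula for Bartlett’s test of sphericity is shown below with its degrees of freedom for the 14 retained items; the result, 91, matches the reported χ²(91). The eigenvalue lines are back-of-the-envelope arithmetic, not values reported by the study: they assume the variance percentages refer to the initial eigenvalues of the 14 standardized items, whose total variance is therefore 14.

```latex
% Bartlett's test of sphericity: n respondents, p items,
% |R| = determinant of the p x p item correlation matrix.
\chi^2 = -\left[(n - 1) - \frac{2p + 5}{6}\right] \ln\lvert \mathbf{R} \rvert,
\qquad df = \frac{p(p - 1)}{2}

% With p = 14 retained items:
df = \frac{14 \times 13}{2} = 91

% Kaiser criterion: retain factor j only if \lambda_j > 1.
% Share of total variance explained by factor j: \lambda_j / p.
\lambda_1 \approx 0.31 \times 14 \approx 4.3,
\qquad \sum_{j=1}^{4} \lambda_j \approx 0.59 \times 14 \approx 8.3
```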

When the solution was rotated using varimax to maximize high item loadings, a four-factor structure was plausible (see Table 3). However, there were at least two cross-loadings above .3, and one factor had only one item loading above the recommended value of .4.
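The two problems noted in the rotated solution, cross-loadings above .3 and a factor with only one item loading above .4, can be checked mechanically. The sketch below is hypothetical: the loading matrix is invented (it is not Table 3), the thresholds are the .3 and .4 values named above, and the rule that a factor needs at least two salient items is an assumption chosen to match the “only one item” concern. Item and factor numbers are 1-based.

```python
# Hypothetical rotated loading matrix: LOADINGS[item][factor].
# Values are invented for illustration; they are not the study's Table 3.
LOADINGS = [
    [0.72, 0.10, 0.05],
    [0.65, 0.35, 0.12],
    [0.08, 0.70, 0.20],
    [0.15, 0.62, 0.33],
    [0.05, 0.25, 0.55],
]


def cross_loading_items(loadings: list[list[float]], cutoff: float = 0.3) -> list[int]:
    """1-based numbers of items with |loading| above `cutoff` on two or more factors."""
    return [
        i + 1
        for i, row in enumerate(loadings)
        if sum(abs(value) > cutoff for value in row) >= 2
    ]


def weak_factors(
    loadings: list[list[float]], cutoff: float = 0.4, min_items: int = 2
) -> list[int]:
    """1-based numbers of factors with fewer than `min_items` items loading above `cutoff`."""
    n_factors = len(loadings[0])
    return [
        f + 1
        for f in range(n_factors)
        if sum(abs(row[f]) > cutoff for row in loadings) < min_items
    ]


print(cross_loading_items(LOADINGS))  # -> [2, 4]
print(weak_factors(LOADINGS))         # -> [3]
```

Absolute values are compared because a large negative loading is as salient as a large positive one.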

These results suggest that, with items 10 and 15 deleted, the scale has a multidimensional structure (subscales) of online readiness. Table 4 shows the structure with the corresponding items. These dimensions correspond to those identified in previous studies. It is, however, advisable to conduct a confirmatory factor analysis without the problematic items (items 10 and 15) to test the four-factor solution.

Discussion and Recommendations

The exploratory factor analysis (EFA) yielded a four-factor structure for the student online readiness instrument that explained 59% of the variance among the items, with Factor 1 explaining 31%. Two items were removed, resulting in a final questionnaire of 14 items with moderately strong reliability (Cronbach’s α = .788). Based on the items that loaded on each factor, the four factors were identified as self-directed learning, self-efficacy, digital engagement, and motivation; they share some similarity with previous online learning instruments. For example, the four-factor structure found in the present study is consistent with the structure recently identified by Yu and Richardson (2015). However, some of the items tended to cross-load on a second factor. These items may need to be reworded so that they load highly on only one factor and have near-negligible loadings on all other factors. Regardless, the current online readiness instrument can be used to gather information on current and future students’ online learning readiness by measuring the competencies defined by Dray et al. (2011), McVay (2000), and Tinto (1993), including ICT engagement, intention to take an online course, learner characteristics, and technology characteristics. Distance learners may also have other characteristics, not captured here, that affect learning outcomes or overall satisfaction with online education and, in turn, attrition from online courses.

The overarching goal of the present study in revising and improving on existing online learning readiness instruments is to increase retention rates in online courses. The current instrument expands on the competencies necessary for student success in online learning (e.g., motivation and self-efficacy) and provides support for the psychometric properties that should be measured to better understand students’ online learning readiness. This could allow learners to develop their competencies and avoid challenges that would prevent them from succeeding in online learning (Yu & Richardson, 2015; Zawacki-Richter, 2004). Because educators are often unfamiliar with how to measure these competencies, the current 14-item student online learning readiness instrument should facilitate the process and allow students to be assessed before they take an online course or enroll in a digital education program. Interventions and/or institutional support could then be provided to students who are not ready for online learning. This, in turn, could reduce online course attrition rates, thereby enhancing meaningful learning experiences and online education as a whole.

The current study was not without limitations. First, although students were asked whether they had previous experience with online courses, the researchers did not verify these data with the academic institution. In addition, no attempt was made to compare the competencies of students currently enrolled in an online course with those of students who had never taken one; such differences may have affected the results. Second, the deleted item 10 (Concerning an online course, I am likely to take risks without teacher direction rather than asking for help) and item 15 (My use of technology is mostly for non-academic purposes), which were designed to assess cognitive engagement and student behavior respectively, could have been answered in a socially desirable way. Students’ perception of risk as online learners may need to be reassessed in future studies. It is also possible that students were not able to separate their use of technology for academic and non-academic purposes; future studies should consider assessing this behavior with multiple items. Finally, the exploratory factor analysis (EFA) used to examine the construct validity of the current instrument did not allow any theoretical model to be tested. Future studies should conduct a confirmatory factor analysis (CFA) to further knowledge of online learning readiness and to test the predictive validity of the current instrument, or of existing online readiness instruments, with a four-factor model.

References

Ali, R., & Leeds, E. (2009). The impact of classroom orientation in online student retention. Online Journal of Distance Learning Administration, 12(4). Retrieved from https://www.westga.edu/~distance/ojdla/winter124/ali124.html.

Allen, I. E., & Seaman, J. (2008). Staying the course: Online education in the United States, 2008. Needham, MA: Sloan-C. Retrieved from http://www.onlinelearningsurvey.com/reports/staying-the-course.pdf

Allen, I. E., & Seaman, J. (2013). Changing course: Ten years of tracking online education in the United States. Wellesley, MA: Babson College/Quahog Research Group.

Atkinson, J. K., Blankenship, R., & Bourassa, G. (2012). College student online learning readiness of students in China. China-USA Business Review, (3), 438-445.

Atkinson, J. K., Blankenship, R., & Droege, S. (2011). College student online learning readiness: A comparison of students in the U. S. A., Australia and China. International Journal of Global Management Studies Professional, 3(1), 62-75.

Bernard, R. M., Brauer, A., Abrami, P. C., & Surkes, M. (2004). The development of a questionnaire for predicting online learning achievement. Distance Education, 25(1), 31-47.

Bryant, J., & Adkins, (2013). National research report: Online student readiness and satisfaction within subpopulations. Cedar Rapids, IA: Noel-Levitz, LLC.

Carr, S. (2000). As distance education comes of age, the challenge is keeping the students. The Chronicle of Higher Education, 46(23), A39–A41. Retrieved from http://www.chronicle.com/article/As-Distance-Education-Comes-of/14334

Davis, T. (2010). Assessing online learning readiness: Perceptions of distance learning stakeholders in three Oklahoma community colleges. LAP Lambert Academic Publishing.

Dray, B. J., Lowenthal, P. R., Miszkiewicz, M. J., Ruiz-Primo, M. A., & Marczynski, K. (2011). Developing an instrument to assess student readiness for online learning: A validation study. Distance Education, 32(1), 29-47.

Fowler, F. J. (2009). Survey research methods (4th ed.). Thousand Oaks, CA: Sage.

Fredricks, J. A., Blumenfeld, P. C., & Paris, A. (2004). School engagement: Potential of the concept, state of the evidence. Review of Educational Research, 74, 59–119. doi: 10.3102/00346543074001059.

Hart, C. (2012). Factors associated with student persistence in an online program of study: A review of the literature. Journal of Interactive Online Learning, 11(1), 19-42.

Hung, M. L., Chou, C., Chen, C. H., & Own, Z. Y. (2010). Learner readiness for online learning: Scale development and student perceptions. Computers & Education, 55(3), 1080-1090.

Ilgaz, H., & Gülbahar, Y. (2015). A snapshot of online learners: E-readiness, e-satisfaction and expectations. International Review of Research in Open and Distributed Learning, 16(2), 171-187.

Jaschik, S. (2009, April 6). Rise in distance enrollments. Inside Higher Ed. Retrieved from http://www.insidehighered.com/news/2009/04/06/distance

Kerr, M. S., Rynearson, K., & Kerr, M. C. (2006). Student characteristics for online learning success. The Internet and Higher Education, 9(2), 91-105.

Leary, M. R. (2004). Introduction to behavioral research methods (4th ed.). Boston: Pearson Education, Inc.

Lee, Y., & Choi, J. (2011). A review of online course dropout research: implications for practice and future research. Educational Technology Research and Development, 59, 593-618.

Lokken, F. (2009). Distance education survey results: Tracking the impact of eLearning at community colleges. Washington, DC: Instructional Technology Council. Retrieved from http://www.itcnetwork.org/file.php?file=/1/ITCAnnualSurveyMarch2009Final.pdf.

Mattice, N. J., & Dixon, P. S. (1999). Student preparedness for distance education. Santa Clarita, CA: College of the Canyons. (ERIC Document Reproduction Service No. ED436216).

Mayes, R., Luebeck, J., Ku, H., Akarasriworn, C., & Korkmaz, ?. (2011). Themes and strategies for transformative online instruction: A review of literature and practice. Quarterly Review of Distance Education, 12(3), 151-166.

McVay, L. M. (2000). Developing a web-based distance student orientation to enhance student success in an online bachelor’s degree completion program (Doctoral dissertation, Nova Southeastern University). Retrieved from http://technologysource.org/article/effective_student_preparation_for_online_learning/

McVay, L. M. (2001a). How to be a successful distance education student: Learning on the internet. New York: Prentice Hall.

McVay, L. M. (2001b). Effective student preparation for online learning. The Technology Source, November/December 2001, 1-16. Retrieved from http://technologysource.org/article/effective_student_preparation_for_online_learning/.

McVay, L. M. (2003). Developing an effective online orientation course to prepare students for success in a web-based learning environment. In S. Reisman, J. G. Flores & D. Edge (Eds.), Electronic learning communities: Issues and practices (pp. 367-411). Greenwich, CT: Information Age Publishing.

Miller, R. (2005). Listen to the students: A qualitative study investigating adult student readiness for online learning. Proceedings of the 2005 National AAAE Research Conference (pp. 441-453), San Antonio, TX. Retrieved from http://aaaeonline.org/uploads/allconferences/668405.AAAEProceedings.pdf.

Moody, J. (2004). Distance education: Why are the attrition rates so high? Quarterly Review of Distance Education, 5(3), 205–210. Retrieved from http://www.infoagepub.com/Quarterly-Review-of-Distance-Education.html

Netemeyer, R. G., Bearden, W. O., & Sharma, S. (2003). Scaling procedures: Issues and applications. London: Sage Publications.

Panuwatwanich, K., & Stewart, A. R. (2012). Linking online learning readiness to the use of online learning tools: The case of postgraduate engineering students. Paper presented at Australasian Association for Engineering Education (AAEE) Annual Conference. Melbourne, Victoria, Australia.

Parnell, J. A., & Carraher, S. (2003). The management education by internet readiness (Mebir) scale: Developing a scale to assess personal readiness for internet-mediated management education. Journal of Management Education, 27(4), 431–446. doi: 10.1177/1052562903252506.

Penn State (n.d.). Student self-assessment for online learning readiness. Retrieved from https://esurvey.tlt.psu.edu/Survey.aspx?s=246aa3a5c4b64bb386543eab834f8e75.

Pillay, H., Irving, K., & Tones, M. (2007). Validation of the diagnostic tool for assessing tertiary students’ readiness for online learning. Higher Education Research & Development, 26(2), 217-234.

Plata, I. (2013). A proposed virtual learning environment (VLE) for Isabela State University (ISU). In the Second International Conference on e-Technologies and Networks for Development (pp. 28-41). The Society of Digital Information and Wireless Communication. Retrieved from http://sdiwc.net/digital-library/aproposed-virtual-learning-environment-vle-for-isabela-state-university-isu.html.

Smith, B. G. (2010). E-learning technologies: A comparative study of adult learners enrolled on blended and online campuses engaging in a virtual classroom (Doctoral dissertation, Capella University).

Smith, P. J., Murphy, K. L., & Mahoney, S. E. (2003). Towards identifying factors underlying readiness for online learning: An exploratory study. Distance Education, 24(1), 57–67. doi:10.1080/01587910303043

Smith, T. C. (2005). Fifty-one competencies for online instruction. The Journal of Educators Online, 2(2), 1-18.

Tinto, V. (1975). Dropout from higher education: A theoretical synthesis of recent research. Review of Educational Research, 45(1), 89-125.

Tinto, V. (1987). Leaving college: Rethinking the causes and cures of student attrition. Chicago, IL: The University of Chicago Press.

Tinto, V. (1993). Leaving college: Rethinking the causes and cures of student attrition. Chicago, IL: The University of Chicago Press.

Trochim, W. M. (2006). Research methods knowledge base (2nd ed.). Retrieved from http://www.socialresearchmethods.net/kb/.

Warner, D., Christie, G., & Choy, S. (1998). Research report: The Readiness of VET clients for flexible delivery including on-line learning. Brisbane: Australian National Training Authority.

Willging, P. A., & Johnson, S. D. (2004). Factors that influence students’ decision to dropout of online courses. Journal of Asynchronous Learning Networks, 8(4), 105–118. Retrieved from http://sloanconsortium.org/publications/jaln_main.

Yu, T., & Richardson, J. C. (2015). An exploratory factor analysis and reliability analysis of the student online learning readiness (SOLR) instrument. Journal of Asynchronous Learning Networks, 19(5), 120-141.

Zawacki-Richter, O. (2004). The growing importance of support for learners and faculty in online distance education. In J. E. Brindley, C. Walti, & O. Zawacki-Richter (Eds.), Learner Support in Open, Distance and Online Learning Environment (pp. 51-62). Bibliotheks- und Informationssystem der Universität Oldenburg.

Appendix A

Online Journal of Distance Learning Administration, Volume XX, Number 1, Spring 2017

University of West Georgia, Distance Education Center
